How to Run an LLM Locally: A Witty Guide to Ollama & Open WebUI Mastery

Run an LLM locally with Ollama & Open WebUI! Witty guide to setup, APIs, & alternatives like LM Studio.

Ever fantasized about wielding your own “Chat-GPT” on your laptop, free from cloud subscriptions? Not just flirting with APIs like a tech tourist, but plunging into AI's raw, electric soul? You’re my kind of rebel. My inbox’s been buzzing with readers begging for a no-jargon guide to running an LLM locally, and I’m here to serve it up, Neurally Intense style.

Over a frothy flat white, I grilled a startup CTO a few weeks back about their much-hyped “AI-powered” features. “What’s the stack? What’s the vibe?” I pressed. His answer? They’re still “exploring AI possibilities.” Translation: they’re lost in the buzzword swamp, dodging hype-vines. My advice? Ditch the webinars and get hands-on. Test LLMs via APIs, sure, but the real alchemy happens when you run a large language model locally or on a VPS. That’s how you morph from curious to commanding.

This guide is your roadmap to running an LLM locally with Ollama and Open WebUI, two open-source titans that make AI accessible and downright sexy. I’ll also sprinkle in API magic with LiteLLM and OpenAI for extra zest. Craving a cloud-based sequel? Ping me on X @MathanNeurally. Let’s spark this AI revolution.

Why Run an LLM Locally?

Before we dive into the how, let’s tackle the why. Running an LLM locally is like brewing your coffee instead of grabbing a $7 latte. It’s cost-free (no API fees), private (your data stays home), and customizable (tweak models to your heart’s content). Plus, it’s a flex—nothing says “I’m a tech badass” like spinning up LLaMA on your rig. Whether you’re a developer, AI hobbyist, or startup CTO, local LLMs let you experiment without Big Tech’s guardrails.

How to Run an LLM Locally with Ollama

First, you need a tool that runs LLMs without turning your laptop into a space heater. Enter Ollama, an open-source gem that’s as friendly as your favorite barista. It lets you download and run models like LLaMA, Mistral, or Gemma with minimal fuss, delivering AI straight to your desktop.

What You’ll Need:

- A solid machine (8GB RAM minimum, 16GB+ for swagger; GPU optional but dreamy).

- A BSD/Unix-based OS (MacOS, Linux) or Windows with WSL2. Windows warriors, I’ve got you covered.

- A pinch of curiosity and a hunger for AI supremacy.

Installing Ollama:

- Snag the goods: Visit ollama.ai for the installer. MacOS/Linux users, it’s a breeze. Windows users, ensure WSL2 is enabled (Google “enable WSL2” for a quick win).

- Run the command: Open a terminal and paste:

curl -fsSL https://ollama.ai/install.sh | shWindows/WSL2 users, use this in your WSL2 terminal.

- Check the pulse: Type

ollama --version. See a version number? You’re in business. If not, DM me on X, and we’ll debug over virtual espresso.

Pick Your Model:

Ollama offers a smorgasbord of models. Start with Gemma 3 (4B parameters), potent yet storage-friendly. To fetch it:

ollama pull gemma3:4bIt’s a few GB, so queue a podcast. Then test it:

ollama run gemma3:4b

Boom, you’re chatting with an AI. Toss it a curveball like, “What’s the secret to galactic domination?” (Spoiler: It’s not just Wi-Fi.)

Model Comparison:

| Model | Parameters | RAM Needed | Best For |

|---|---|---|---|

| Gemma 3 | 4B | 4-8GB | Balanced performance |

| Deepseek r-1 7B | 7B | 8-12GB+ | GPU-powered heavy lifting |

| Qwen-3 | 8B | 8-12GB | Balanced performance |

Pro Tip: Browse Ollama’s model library for options. Match your model to your hardware’s muscle.

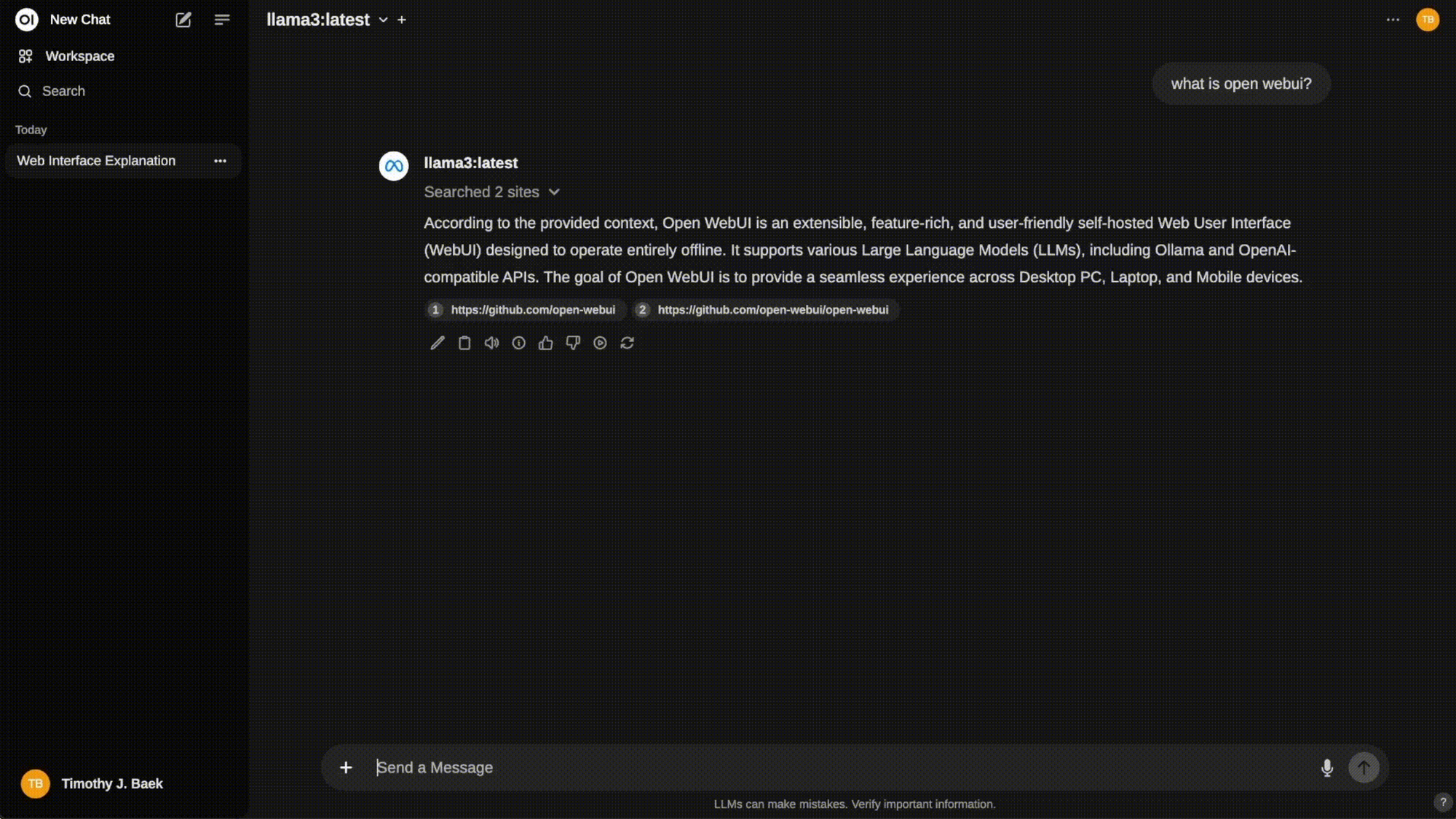

Open WebUI: Your AI’s Polished Persona

Terminal chats are gritty, but they’re like sipping artisanal coffee from a paper cup. Open WebUI is the open-source answer, draping your LLM in a sleek, ChatGPT-esque interface. It’s intuitive, customizable, and makes you feel like Tony Stark commanding JARVIS.

Open WebUI’s Superpowers:

- A browser-based chat UI smoother than your latte.

- Multi-model support (toggle LLaMA, Mistral, etc.).

- Personalization (themes, chat history).

- API integration for flexibility.

Installing Open WebUI:

- Get Docker: No Docker? Zip to docker.com, it’s like Lego for apps.

- Launch Open WebUI: Run:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:mainThis maps to localhost:3000.

- Link to Ollama: Start Ollama (

ollama serve). Open WebUI auto-detects it. - Go live: Visit

http://localhost:3000, sign in, and bask in your AI cockpit.

Now you’re chatting via a polished UI. Upload files, tweak settings, or roast your AI’s wit. It’s your sandbox.

Witty Aside: Docker feeling like a tech tantrum? Open WebUI’s GitHub has a manual install, but it’s like assembling IKEA furniture blindfolded.

Exploring Other Chat Front-Ends: Beyond Open WebUI

Open WebUI is the belle of the ball, but it’s not the only dance partner for your local LLM. The open-source world is bursting with chat front-ends, each with its own flair, from minimalist to feature-packed. I’ve curated a spicy list of alternatives to tickle your tech fancy. Pick one that vibes with your style, or mix and match to keep your AI adventures fresh. Here’s the lineup:

- LM Studio: A sleek, all-in-one platform for running LLMs with a user-friendly interface. Perfect for beginners craving simplicity without Docker’s wrestling match. (lmstudio.ai)

- AnythingLLM: A lightweight, customizable front-end that plays nice with Ollama. It’s like Open WebUI’s scrappy cousin, ideal for quick setups and document chats. (GitHub)

- ChatUI (Hugging Face): A minimalist gem from Hugging Face. Bare-bones but tweakable, perfect for coders who love tinkering. (GitHub)

- oobabooga’s text-generation-webui: A powerhouse for advanced users, offering deep customization. It’s like assembling a spaceship, but the results are stellar. (GitHub)

Each brings unique flavor, whether it’s ease, customization, or power. Experiment, break things, and find your match. Got a favorite? Drop it in the comments or DM me on X (@MathanNeurally), I’m all ears over virtual coffee.

API Alchemy: LiteLLM and OpenAI Integration

Ready to crank the intensity? Add API connectivity to juggle local and cloud models. LiteLLM and OpenAI API are your secret sauces.

LiteLLM: The Universal Translator

LiteLLM unifies LLM APIs into one format. It’s like teaching your AIs fluent tech.

- Install LiteLLM:

pip install litellm- Start the proxy:

litellm --model ollama/gemma3:4bThis exposes your Ollama model at http://localhost:8000.

- Connect Open WebUI: Add

http://localhost:8000as a custom API endpoint.

OpenAI API: The Premium Flex

Got an OpenAI API key? (It’s a paid splurge, worth it.) Here’s how:

- Grab your key from platform.openai.com.

- In Open WebUI, go to Settings, API Keys, paste it.

- Select “OpenAI” to vibe with GPT-4 alongside LLaMA.

Why It’s Hot: APIs let you mix and match. Run LLaMA for free, tap GPT-4 for heavy queries. Bicycle for commutes, Ferrari for joyrides.

Troubleshooting Common Hiccups

- Ollama won’t start: Check RAM (close Chrome’s tabs), reinstall via curl.

- Docker errors: Ensure Docker Desktop runs, ports are free (docker ps -a).

- Model too slow: Switch to Phi-2 or add RAM.

- WebUI not connecting: Verify Ollama (ollama serve), clear browser cache.

Pro Tips for LLM Mastery

- Optimize storage: Models are chunky, keep one or two active with

ollama rm <model>. - Experiment with prompts: Try “Explain quantum physics like a pirate” for fun.

- Monitor resources: Use

htop(Linux/Mac) or Task Manager (Windows). - Stay updated: Check Ollama and Open WebUI GitHubs for new features.

FAQ

Can I run Ollama on a low-spec laptop?

Yes, stick to Phi-2, temper expectations.

Is Open WebUI free?

Absolutely, open-source, zero costs unless you add paid APIs.

Local vs. cloud LLMs?

Local: private, free. Cloud: powerful, costly. Hybrid rules, cloud guide coming!

Can I fine-tune models locally?

Tricky with Ollama, start with pre-trained models.

The Neurally Intense Takeaway

Running an LLM locally isn’t a tech trick, it’s a rebellion against black-box AI. You’re forging your own brain. Ollama, Open WebUI, and alternatives like LM Studio make it accessible, while LiteLLM and OpenAI APIs give you wings. Leap from “AI-curious” to “AI-competent.”

Don’t just install, experiment. Break things. Ask absurd questions or see if your LLM can outwrite me (good luck). The tech is as bold as you are. Be bold.

Loved this guide? Share it on X or LinkedIn to spread the AI insight! Questions or craving the cloud guide? Hit me up on X (@MathanNeurally). I’m not Yoda, but I’ll guide you to the AI Force.

Stay Curious | Stay Bold | Stay Unapologetically You.